UDF for JSON

- 1. Create the bean
- 2. Add the dependencies
  - 2.1 Dependency JARs
  - 2.2 Packaging with dependencies

1. Create the bean

```java
package com.jxlg.hiveUDF;

public class MovieRateBean {
    private String movie;
    private String rate;
    private String timeStamp;
    private String uid;
    // {"movie":"1190","rate":"4","timeStamp":"987310760","uid":"1"}

    public MovieRateBean() {
    }

    public MovieRateBean(String movie, String rate, String timeStamp, String uid) {
        this.movie = movie;
        this.rate = rate;
        this.timeStamp = timeStamp;
        this.uid = uid;
    }

    public String getMovie() {
        return movie;
    }

    public void setMovie(String movie) {
        this.movie = movie;
    }

    public String getRate() {
        return rate;
    }

    public void setRate(String rate) {
        this.rate = rate;
    }

    public String getTimeStamp() {
        return timeStamp;
    }

    public void setTimeStamp(String timeStamp) {
        this.timeStamp = timeStamp;
    }

    public String getUid() {
        return uid;
    }

    public void setUid(String uid) {
        this.uid = uid;
    }

    @Override
    public String toString() {
        return movie + '\t' + rate + '\t' + timeStamp + '\t' + uid;
    }
}
```
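Jackson 1.x's `ObjectMapper` (used in the UDF in step 3) fills the bean through its setters, so each JSON key has to match a bean property name exactly. For simple names like these, that mapping follows the JavaBeans naming convention (`setTimeStamp` gives the property `timeStamp`). The sketch below uses the JDK's `java.beans.Introspector` to list those names; `BeanPropertyCheck` and `RateStub` (a trimmed copy of `MovieRateBean`) are hypothetical, and Jackson runs its own introspection, so this only approximates what Jackson sees.

```java
import java.beans.BeanInfo;
import java.beans.IntrospectionException;
import java.beans.Introspector;
import java.beans.PropertyDescriptor;
import java.util.Set;
import java.util.TreeSet;

public class BeanPropertyCheck {

    // Hypothetical stand-in with the same accessors as MovieRateBean.
    public static class RateStub {
        private String movie, rate, timeStamp, uid;
        public String getMovie() { return movie; }
        public void setMovie(String movie) { this.movie = movie; }
        public String getRate() { return rate; }
        public void setRate(String rate) { this.rate = rate; }
        public String getTimeStamp() { return timeStamp; }
        public void setTimeStamp(String timeStamp) { this.timeStamp = timeStamp; }
        public String getUid() { return uid; }
        public void setUid(String uid) { this.uid = uid; }
    }

    // Names of writable properties, derived from setters by the JavaBeans rules.
    static Set<String> writableProperties(Class<?> type) {
        try {
            // Stop at Object so getClass() is not reported as a property.
            BeanInfo info = Introspector.getBeanInfo(type, Object.class);
            Set<String> names = new TreeSet<>();
            for (PropertyDescriptor pd : info.getPropertyDescriptors()) {
                if (pd.getWriteMethod() != null) {
                    names.add(pd.getName());
                }
            }
            return names;
        } catch (IntrospectionException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        // setTimeStamp -> "timeStamp", which must equal the JSON key
        System.out.println(writableProperties(RateStub.class)); // [movie, rate, timeStamp, uid]
    }
}
```

By default Jackson 1.x also fails on JSON keys that match no property, and the UDF turns that failure into an empty string.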

2. Add the dependencies

2.1 Dependency JARs

```xml
<!--json-->
<dependency>
    <groupId>org.apache.parquet</groupId>
    <artifactId>parquet-hadoop</artifactId>
    <version>1.10.0</version>
</dependency>
<!--hadoop-common-->
<dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-common</artifactId>
    <version>3.0.3</version>
</dependency>
<!--hadoop-client-->
<dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-client</artifactId>
    <version>3.0.3</version>
</dependency>
<!--hive-->
<dependency>
    <groupId>org.apache.hive</groupId>
    <artifactId>hive-exec</artifactId>
    <version>3.1.2</version>
</dependency>
```

2.2 Packaging with dependencies

```xml
<build>
    <plugins>
        <plugin>
            <groupId>org.apache.maven.plugins</groupId>
            <artifactId>maven-compiler-plugin</artifactId>
            <configuration>
                <source>1.8</source>
                <target>1.8</target>
            </configuration>
        </plugin>
        <plugin>
            <!-- Maven JAR plugin -->
            <groupId>org.apache.maven.plugins</groupId>
            <artifactId>maven-jar-plugin</artifactId>
            <version>3.1.0</version>
        </plugin>
        <!-- Maven assembly plugin for packaging -->
        <plugin>
            <artifactId>maven-assembly-plugin</artifactId>
            <executions>
                <execution>
                    <phase>package</phase>
                    <goals>
                        <goal>single</goal>
                    </goals>
                </execution>
            </executions>
            <configuration>
                <descriptorRefs>
                    <!-- Bundle the dependencies into the JAR -->
                    <descriptorRef>jar-with-dependencies</descriptorRef>
                </descriptorRefs>
            </configuration>
        </plugin>
    </plugins>
    <!-- Custom JAR name -->
    <finalName>HiveUDF</finalName>
</build>
```

3. Write the UDF

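```java
package com.jxlg.hiveUDF;

import com.google.common.base.Strings;
import org.apache.hadoop.hive.ql.exec.UDF;
import org.codehaus.jackson.map.ObjectMapper;

import java.io.IOException;

/**
 * A custom UDF must extend UDF.
 */
public class JsonUDF extends UDF {

    // ObjectMapper is thread-safe once configured, so one shared instance is enough.
    private static final ObjectMapper OBJECT_MAPPER = new ObjectMapper();

    // Hive looks the method up by name, so it must be called evaluate.
    public String evaluate(String json) {
        // Return NULL when the JSON string is null or empty
        if (Strings.isNullOrEmpty(json)) {
            return null;
        }
        try {
            // Parse the JSON into a MovieRateBean entity
            MovieRateBean mv = OBJECT_MAPPER.readValue(json, MovieRateBean.class);
            return mv.toString();
        } catch (IOException e) {
            // Malformed JSON: return an empty string instead of failing the query
            return "";
        }
    }
}
```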
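Since `evaluate` only runs inside Hive, it can help to check its input/output contract locally first. The sketch below is a hypothetical, dependency-free stand-in (`JsonUdfLocalCheck` is a made-up name): a regular expression replaces Jackson's `ObjectMapper` and handles only flat objects whose values are all strings, so it approximates Jackson rather than reproducing it. It keeps the same three outcomes as `JsonUDF`: `NULL` for null or empty input, an empty string when parsing fails, and the tab-separated fields otherwise.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class JsonUdfLocalCheck {

    // Matches "key":"value" pairs; only ASCII double quotes count as quotes.
    private static final Pattern FIELD = Pattern.compile("\"(\\w+)\"\\s*:\\s*\"([^\"]*)\"");

    public String evaluate(String json) {
        // Same as JsonUDF: null or empty input yields NULL.
        if (json == null || json.isEmpty()) {
            return null;
        }
        String trimmed = json.trim();
        if (!trimmed.startsWith("{") || !trimmed.endsWith("}")) {
            return ""; // JsonUDF returns "" when parsing fails
        }
        Map<String, String> fields = new HashMap<>();
        Matcher m = FIELD.matcher(trimmed);
        while (m.find()) {
            fields.put(m.group(1), m.group(2));
        }
        if (fields.isEmpty()) {
            return ""; // e.g. typographic (curly) quotes are not valid JSON quotes
        }
        // Same field order as MovieRateBean.toString(); a missing key prints as "null".
        return fields.get("movie") + '\t' + fields.get("rate") + '\t'
                + fields.get("timeStamp") + '\t' + fields.get("uid");
    }

    public static void main(String[] args) {
        JsonUdfLocalCheck udf = new JsonUdfLocalCheck();
        System.out.println(udf.evaluate(
                "{\"movie\":\"1193\",\"rate\":\"5\",\"timeStamp\":\"987301760\",\"uid\":\"1\"}"));
        System.out.println(udf.evaluate("{\u201Cmovie\u201D:\u201C1193\u201D}").isEmpty()); // true
        System.out.println(udf.evaluate(null)); // null
    }
}
```

The second call shows a practical pitfall: a data line typed with curly quotes “ ” instead of ASCII `"` is not valid JSON, so with Jackson it also ends up in the `catch` branch and comes back as an empty string.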

4. Build and upload the JAR

Build with Maven's `package` phase (`mvn package`).

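The build produces two JARs in `target/`: `HiveUDF.jar` and `HiveUDF-jar-with-dependencies.jar`. The second one bundles the dependencies and is the one to use.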
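Before uploading, it can be worth confirming that the classes the UDF needs at runtime actually ended up in the bundled JAR. The sketch below is a hypothetical helper (`FatJarCheck` is a made-up name) built on the JDK's `java.util.jar.JarFile`; the default path assumes Maven's standard `target/` directory and the `finalName` above, and the class list is taken from the imports in step 3. It prints what it finds rather than assuming which dependencies are present.

```java
import java.io.IOException;
import java.util.jar.JarFile;

public class FatJarCheck {

    // Converts "a.b.C" to the entry name "a/b/C.class" and looks it up in the JAR.
    static boolean containsClass(String jarPath, String className) throws IOException {
        String entryName = className.replace('.', '/') + ".class";
        try (JarFile jar = new JarFile(jarPath)) {
            return jar.getJarEntry(entryName) != null;
        }
    }

    public static void main(String[] args) throws IOException {
        // Assumed default location; pass another path as the first argument.
        String jar = args.length > 0 ? args[0] : "target/HiveUDF-jar-with-dependencies.jar";
        String[] classes = {
            "com.jxlg.hiveUDF.JsonUDF",
            "com.jxlg.hiveUDF.MovieRateBean",
            "org.codehaus.jackson.map.ObjectMapper",
            "com.google.common.base.Strings"
        };
        for (String c : classes) {
            System.out.println(c + " -> " + (containsClass(jar, c) ? "present" : "MISSING"));
        }
    }
}
```

The same information is available from the JDK's `jar tf` command, which lists every entry in the archive.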

Finally, upload it to the server or the cluster.

5. Add the JAR to Hive

Start the Hive command-line interface with `bin/hive`, then add the JAR:

add jar /home/hdfs/target/HiveUDF-jar-with-dependencies.jar;

6. Create a table to hold the JSON strings

Source data:

sudo vi /home/hdfs/mv.txt

```
{"movie":"1193","rate":"5","timeStamp":"987301760","uid":"1"}
{"movie":"1192","rate":"3","timeStamp":"987302760","uid":"1"}
{"movie":"1191","rate":"4","timeStamp":"987303760","uid":"1"}
{"movie":"1194","rate":"2","timeStamp":"987300761","uid":"1"}
{"movie":"1195","rate":"1","timeStamp":"987300762","uid":"1"}
{"movie":"1196","rate":"3","timeStamp":"987300763","uid":"1"}
{"movie":"1197","rate":"4","timeStamp":"987300764","uid":"1"}
{"movie":"1198","rate":"6","timeStamp":"987300765","uid":"1"}
{"movie":"1199","rate":"2","timeStamp":"987300766","uid":"1"}
{"movie":"1190","rate":"4","timeStamp":"987310760","uid":"1"}
```

Create the table `t_movie`:

create table if not exists t_movie(json string);

Load the data:

load data local inpath "/home/hdfs/mv.txt" into table t_movie;

7. Create a temporary function

create temporary function myjson as "com.jxlg.hiveUDF.JsonUDF";

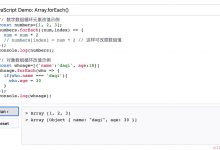

8. Use the function

select myjson(json) from t_movie;

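Each JSON row comes back as its four fields (movie, rate, timeStamp, uid) separated by tabs, as produced by `MovieRateBean.toString()`.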

9. Drop the temporary function

drop temporary function if exists myjson;

爱站程序员基地