rapidminer

This technical article will teach you how to pre-process data, create your own neural networks, and train and evaluate models using US-CERT’s simulated insider threat dataset. The methods and solutions are designed for non-domain experts, particularly cyber security professionals. We will start our journey with the raw data provided by the dataset and provide examples of different pre-processing methods to get it “ready” for the AI solution to ingest. We will ultimately create models that can be re-used for additional predictions based on security events. Throughout the article, I will also point out the applicability and return on investment depending on your existing Information Security program in the enterprise.

Note: To use and replicate the pre-processed data and steps we use, prepare to spend 1–2 hours on this page. Stay with me and try not to fall asleep during the data pre-processing portion. What many tutorials don’t state is that if you’re starting from scratch, data pre-processing takes up to 90% of your time when doing projects like these.

At the end of this hybrid article and tutorial, you should be able to:


- Pre-process the data provided from US-CERT into an AI solution ready format (Tensorflow in particular)


- Use RapidMiner Studio and Tensorflow 2.0 + Keras to create and train a model using a pre-processed sample CSV dataset


- Perform basic analysis of your data, choose fields for AI evaluation, and understand how practical the methods described are for your organization

Disclaimer

The author provides these methods, insights, and recommendations *as is* and makes no claim of warranty. Please do not use the models you create in this tutorial in a production environment without sufficient tuning and analysis before making them a part of your security program.


Tools Setup

If you wish to follow along and perform these activities yourself, please download and install the following tools from their respective locations:


- Choose: To be hands-on from scratch and experiment with your own variations of the data, download the full dataset: ftp://ftp.sei.cmu.edu/pub/cert-data. Caution: it is very large. Please plan to have several hundred gigabytes of free space.

- Choose: If you just want to follow along and execute what I’ve done, you can download the pre-processed data, Python, and solution files from my GitHub (click repositories and find tensorflow-insiderthreat): https://github.com/dc401/tensorflow-insiderthreat

- Optional: If you want a nice IDE for Python: Visual Studio 2019 Community Edition with the applicable Python extensions installed

- Required: RapidMiner Studio Trial (or an educational license if it applies to you)

- Required: Python environment; use the Python 3.8.3 x64 release

- Required: Install the Python packages numpy, pandas, tensorflow, and sklearn via “pip install <packagename>” from the command line (sklearn is published on PyPI as scikit-learn)

Overview of the Process

It’s important for newcomers to any data science discipline to know that the majority of your time will be spent pre-processing and analyzing the data you have. That includes cleaning up the data, normalizing it, extracting any additional meta insights, and then encoding the data so that it is ready for an AI solution to ingest.

- We need to extract and process the dataset so that it is structured with the fields we may need as ‘features’, which simply means the columns to be included in the AI model we create. We will need to ensure all the text strings are encoded into numbers so the engine we use can ingest them. We will also have to mark which rows are insider threats and which are non-threats (true positives and true negatives).

- Next, after data pre-processing, we’ll need to select, set up, and create the functions we will use to build the model and the neural network layers themselves

- Generate the model, examine its accuracy and applicability, and identify additional modifications or tuning needed in any part of the data pipeline

Examining the Dataset Hands-On and Manual Pre-Processing

Examining the raw US-CERT data requires you to download compressed files that must be extracted. Note just how large the sets are compared to how little of them we will actually use once the data pre-processing is done.

In this article, we saved a bunch of time by going directly to answers.tar.bz2, which contains the insiders.csv file that identifies which datasets and which individual extracted records are of value. It is worth stating that the index provided also has correlated record numbers in the extended data, such as the file and psychometric data. We didn’t use the extended meta in this tutorial because of the extra time it would take to correlate and consolidate all of it into a single CSV.

To see a more comprehensive set of feature sets extracted from this same data, consider checking out this research paper called “Image-Based Feature Representation for Insider Threat Classification.” We’ll be referring to that paper later in the article when we examine our model accuracy.


Before the data can be encoded and read by a function, we need to extract it and organize it into the columns that we need in order to predict one of them. Let’s use good old Excel to insert a column into the CSV. Prior to the screenshot, we took all the rows from the datasets referenced in “insiders.csv” for scenario 2 and added them.

Insiders.csv index of true positives


The scenario (2) is described in scenarios.txt: “User begins surfing job websites and soliciting employment from a competitor. Before leaving the company, they use a thumb drive (at markedly higher rates than their previous activity) to steal data.”


Examine our pre-processed data, which includes its intermediary and final forms, as shown below:

Intermediate composite true positive records for scenario 2


The screenshot above is a snippet of all the different record types, essentially appended to each other and sorted by date. Note that the different vectors (http vs. email vs. device) do not align easily and have different contexts in their columns. This is not optimal by any means, but since the insider threat scenario includes multiple event types, this is what we’ll have to work with for now. This is the usual case with data that you try to correlate based on time and on multiple events tied to a specific attribute or user, as a SIEM does.

Comparison of different data types that have to be consolidated


For the aggregation set, we combined the relevant CSVs after moving all of the items mentioned in insiders.csv for scenario 2 into the same folder. To assemble the ‘true positive’ only portion of the dataset, we used PowerShell as shown below:

Using PowerShell to merge the CSVs together
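For readers who prefer a script to the shell, the same merge can be sketched in Python. This is a minimal sketch and not the author's PowerShell: the `merge_csvs` helper, the `vector` column filled from the filename, and the sample rows are all assumptions made for illustration. Because the http, email, and device files do not share the same columns, the sketch writes the union of all headers and leaves missing cells empty.

```python
import csv
import tempfile
from pathlib import Path


def merge_csvs(paths, out_path):
    """Append the rows of several CSV files into one file.

    The output header is the union of all input headers, so a column that
    a file lacks is simply left empty for that file's rows.
    """
    rows, fieldnames = [], ["vector"]
    for path in paths:
        path = Path(path)
        with path.open(newline="", encoding="utf-8") as handle:
            for row in csv.DictReader(handle):
                # Hypothetical convention: the record type comes from the filename.
                row["vector"] = path.stem
                rows.append(row)
                for name in row:
                    if name not in fieldnames:
                        fieldnames.append(name)
    with Path(out_path).open("w", newline="", encoding="utf-8") as handle:
        writer = csv.DictWriter(handle, fieldnames=fieldnames, restval="")
        writer.writeheader()
        writer.writerows(rows)
    return len(rows)


# Tiny demonstration with made-up rows, not real CERT records.
with tempfile.TemporaryDirectory() as tmp:
    http = Path(tmp, "http.csv")
    device = Path(tmp, "device.csv")
    http.write_text(
        "id,date,user,url\n1,01/02/2010 08:00:00,U1,http://example.com\n",
        encoding="utf-8",
    )
    device.write_text(
        "id,date,user,activity\n2,01/02/2010 09:00:00,U1,Connect\n",
        encoding="utf-8",
    )
    merged = Path(tmp, "merged.csv")
    total = merge_csvs([http, device], merged)
    with merged.open(newline="", encoding="utf-8") as handle:
        merged_rows = list(csv.DictReader(handle))
```

Reading row by row keeps this simple, but note that it still holds every row in memory before writing, much like the shell variables discussed next.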

Right now we have a completely imbalanced dataset that contains only true positives. We’ll also have to add true negatives, and the best approach is an even 50/50 split between threat and non-threat records. That is almost never the case with security data, so we’ll do what we can, as you’ll find below. I also want to point out that if you’re doing manual data processing in an OS shell, whatever you import into a variable stays in memory and is not released or garbage collected by itself. As you can see from my PowerShell memory consumption, after a bunch of data manipulation and CSV wrangling I bumped my usage up to 5.6 GB.

Memory isn’t released automatically. We also count the lines in each CSV file.


Let’s look at the R1 dataset files. From them we’ll pull records that we know are confirmed true negatives (non-threats) for each of the 3 types, using the same filenames as in the true positive dataset extracts (again, these come from the R1 dataset, which contains benign events).

We’ll merge a number of records from all 3 of the R1 true negative data sets: the logon, http, and device files. Note that in the R1 true negative set we did not find an emails CSV, which adds to the imbalance of our aggregate data set.

Using PowerShell, we count the number of lines in each file. Since we had about 14K rows on the true positive side, I arbitrarily took the first 4,500 applicable rows from each true negative file and appended them to the training dataset so that we have both true positives and true negatives mixed in. We’ll have to add a column to mark which rows are insider threats and which aren’t.

Extracting records of true negatives from 3 complementing CSVs
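The counting and "first N rows" steps above can be sketched in Python as follows. This is an illustrative sketch, not the PowerShell from the screenshot: the helper names `count_rows` and `take_first_rows` and the sample rows are assumptions, and a small limit stands in for the 4,500 rows the article takes from each file.

```python
import csv
import io
from itertools import islice


def count_rows(handle):
    """Count data rows (excluding the header) in an open CSV file."""
    reader = csv.reader(handle)
    next(reader, None)  # skip the header row
    return sum(1 for _ in reader)


def take_first_rows(handle, limit):
    """Return the header and the first `limit` data rows of an open CSV file."""
    reader = csv.reader(handle)
    header = next(reader)
    return header, list(islice(reader, limit))


# Made-up stand-in for one benign R1 file; the article uses limit=4500 per file.
sample = "id,date,user,pc,activity\n" + "".join(
    f"{i},01/04/2010 08:{i:02d}:00,U{i},PC-1,Logon\n" for i in range(10)
)
row_count = count_rows(io.StringIO(sample))
header, first_rows = take_first_rows(io.StringIO(sample), limit=4)
```

Both helpers stream the file, so nothing beyond the selected rows is held in memory, which sidesteps the shell memory growth described earlier.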

In pre-processing our data, we’ve already added all the records of interest and selected various other true negative, non-threat records from the R1 dataset. Now we have our baseline of threats and non-threats concatenated in a single CSV. On the left, we’ve added a new column to denote true/false (or 1/0) using find and replace.

Label encoding the insider threat True/False Column

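The labelling step can also be scripted with pandas instead of find and replace. This is a minimal sketch under stated assumptions: the two small frames are made-up stand-ins for the merged scenario 2 extracts and the R1 sample, and the column name `insiderthreat` follows the name used later in this article.

```python
import pandas as pd

# Made-up rows standing in for the real true positive and true negative extracts.
true_positives = pd.DataFrame(
    {
        "date": ["07/01/2010 08:00:00", "07/01/2010 09:30:00"],
        "user": ["U1", "U1"],
        "vector": ["http", "device"],
    }
)
true_negatives = pd.DataFrame(
    {"date": ["01/04/2010 08:00:00"], "user": ["U2"], "vector": ["logon"]}
)

# Mark each source before combining so the label survives the append.
true_positives["insiderthreat"] = True
true_negatives["insiderthreat"] = False
training = pd.concat([true_positives, true_negatives], ignore_index=True)

# Label-encode the boolean as 1/0, the scripted version of find and replace.
training["insiderthreat"] = training["insiderthreat"].astype(int)
```

Labelling each source before the append avoids having to work out afterwards which rows came from which file.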

Above, you can also see that we started changing true/false strings to numerical categories. This is the beginning of our path to encode the data through manual pre-processing, a hassle we could save ourselves, as we’ll see in future steps, by using RapidMiner Studio or the pandas DataFrame library in Python for Tensorflow. We just wanted to illustrate some of the steps and considerations you’ll have to work through. Following this, we will continue processing our data for a bit. Let’s highlight what we can do using Excel functions before going the fully automated route.

Calculating Unix Epoch Time from the Date and Time Provided


We’re also going to manually convert the date field into Unix Epoch Time for the sake of demonstration; as you can see, it becomes a large integer in a new column. To remove the old column in Excel and rename the new one, create a new sheet such as ‘scratch’ and cut the old date (non-epoch timestamp) values into that sheet. Reference the sheet along with the formula you see in the cell to achieve this effect. The formula is: “=(C2-DATE(1970,1,1))*86400” without quotes.
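The Excel formula subtracts 1 January 1970 from the date and multiplies the difference in days by 86,400 seconds. A Python sketch of the same arithmetic follows. The `MM/DD/YYYY HH:MM:SS` layout in `DATE_FORMAT` is an assumption about how the date column is written, and, like the Excel formula, the sketch applies no time zone adjustment.

```python
from datetime import datetime

EPOCH = datetime(1970, 1, 1)
DATE_FORMAT = "%m/%d/%Y %H:%M:%S"  # assumed layout of the dataset's date column


def to_epoch_seconds(text, fmt=DATE_FORMAT):
    """Convert a timestamp string to Unix epoch seconds with no time zone shift."""
    return int((datetime.strptime(text, fmt) - EPOCH).total_seconds())


epoch = to_epoch_seconds("01/02/2010 08:00:00")
print(epoch)  # 1262419200
```

If your own logs carry local times, decide on a time zone before converting, since this sketch and the formula both take the timestamp at face value.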

Encoding the vector column and feature set column map


In our last manual pre-processing example, you need to ‘categorize’ the vector column of the CSV by label encoding it. You can automate this (or swap in one-hot encoding) via a data dictionary in a script; in our case we show you the manual method of mapping it in Excel, since we have a finite set of vectors in the records of interest (http is 0, email is 1, and device is 2).
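Label encoding and one-hot encoding are easy to mix up, so here is a small sketch of both driven by the same data dictionary. The mapping values come from the text above; the function names are hypothetical.

```python
# Mapping taken from the article: http is 0, email is 1, device is 2.
VECTOR_CODES = {"http": 0, "email": 1, "device": 2}


def label_encode(vector):
    """Label encoding: one integer per category."""
    return VECTOR_CODES[vector]


def one_hot_encode(vector):
    """One-hot encoding: one 0/1 flag per category, in mapping order."""
    return [1 if vector == name else 0 for name in VECTOR_CODES]


vectors = ["http", "device", "email"]
labels = [label_encode(v) for v in vectors]
one_hot = [one_hot_encode(v) for v in vectors]
```

Label encoding keeps a single column but implies an ordering between categories that may not mean anything, while one-hot encoding avoids that at the cost of one extra column per category.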

You’ll notice that we have not done the user, source, or action columns, as they have a very large number of unique values that need label encoding and it’s just impractical by hand. We were able to accomplish this without all the manual wrangling above using the ‘turbo prep’ feature of RapidMiner Studio, and likewise for the remaining columns via Python’s pandas in our script snippet below. Don’t worry about this for now; we will showcase the steps in each AI tool and end up doing the same thing the easy way.

    # Assumes `import pandas as pd` and that `dataframe` was already loaded from the pre-processed CSV.
    # print(pd.unique(dataframe['user']))
    # https://pbpython.com/categorical-encoding.html
    # Convert the high-cardinality string columns to pandas categoricals.
    dataframe["user"] = dataframe["user"].astype('category')
    dataframe["source"] = dataframe["source"].astype('category')
    dataframe["action"] = dataframe["action"].astype('category')
    # Label-encode: each unique string gets its own integer code.
    dataframe["user_cat"] = dataframe["user"].cat.codes
    dataframe["source_cat"] = dataframe["source"].cat.codes
    dataframe["action_cat"] = dataframe["action"].cat.codes
    # print(dataframe.info())
    # print(dataframe.head())
    # save dataframe with new columns for future data mapping
    dataframe.to_csv('dataframe-export-allcolumns.csv')
    # remove old columns
    del dataframe["user"]
    del dataframe["source"]
    del dataframe["action"]
    # restore original names of columns
    dataframe.rename(columns={"user_cat": "user", "source_cat": "source", "action_cat": "action"}, inplace=True)

The above snippet is an example of using Python’s pandas library to manipulate and label encode the columns into numerical values unique to each string value in the original data set. Try not to get caught up in this yet. We’re going to show you the easy and comprehensive approach to all this data science work in RapidMiner Studio.

Important step for defenders: We’re using a pre-simulated dataset that US-CERT has already formatted, and not every SOC is going to have access to the same uniform data for their own security events. Many times your SOC will have only raw logs to export. From an ROI perspective, before pursuing your own DIY project like this, consider the level of effort and whether you can automate exporting the meta of your logs into a CSV format; an enterprise solution such as Splunk or another SIEM might be able to do this for you. You would have to correlate your events and add as many columns as possible for enriched data formatting. You would also have to examine how consistently you can automate exporting this data in a format like US-CERT’s, so that you can use similar methods for pre-processing or ingestion. Make use of your SIEM’s API features to export reports into a CSV format whenever possible.

Walking through RapidMiner Studio with our Dataset

It’s time to use some GUI-based and streamlined approaches. The desktop edition of RapidMiner is Studio, and the latest editions as of 9.6.x have turbo prep and auto modeling built in as part of your workflows. Since we’re not domain experts, we are definitely going to take advantage of this. Let’s dig right in.

Note: If your trial expired before getting to this tutorial and you are using the community edition, you will be limited to 10,000 rows. Further pre-processing is required to limit your dataset to 5K true positives and 5K true negatives, including the header. If applicable, use an educational license, which is unlimited and renewable each year that you are enrolled in a qualifying institution with a .edu email.
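If you do need to trim the training set to fit a row cap, one way to sketch it with pandas is to keep only the first rows of each class. The `cap_per_class` helper and the demo frame are assumptions made for illustration; pick a limit that leaves room for the header row under your license's cap.

```python
import pandas as pd


def cap_per_class(frame, label="insiderthreat", limit=5000):
    """Keep at most `limit` rows for each value of the label column."""
    return frame.groupby(label, sort=False).head(limit).reset_index(drop=True)


# Made-up frame: 7 threat rows and 3 non-threat rows, capped at 4 per class.
demo = pd.DataFrame({"insiderthreat": [1] * 7 + [0] * 3, "row": range(10)})
capped = cap_per_class(demo, limit=4)
```

Taking the first rows per class preserves the existing date ordering; a random sample per class would be an alternative if ordering does not matter to you.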

RapidMiner Studio


Upon starting, we’re going to create a new project and utilize the Turbo Prep feature. You can use other methods, or the manual way of selecting operators via the GUI in the bottom left, for the community edition. However, we’re going to use the enterprise trial because it’s easy to walk through for first-time users.

Import the Non-Processed CSV Aggregate File


We’ll import our non-processed aggregate CSV of true-positive-only data. We’ll also remove the first-row headers and use our own, because the original header row relates to the HTTP vector and does not apply to the USB device connection and email-related records that are also in the dataset, as shown below.

Note: Unlike in our manual pre-processing steps, which included label encoding and reduction, we have not done any of this yet in RapidMiner Studio, in order to show the full extent of what we can easily do with the ‘turbo prep’ feature. We’re going to enable the use of quotes as well and leave the other defaults for proper string escapes.

Next, we set our column header types to their appropriate data types.


Stepping through the wizard, we arrive at the turbo prep tab for review. It shows us the distribution, any errors such as missing values that need to be adjusted, and which columns might be problematic. Let’s start by making sure we identify all of these true positives as insider threats. Click on generate, and we’re going to transform this dataset by inserting a new column in all the rows with a logical ‘true’ statement, like so below.

We’ll save the column details and export the result for further processing later, or we’ll use it as a base template set when we begin to pre-process for the Tensorflow method that follows, to make things a little easier.

After the export, as you can see above, don’t forget that we need to balance the data with true negatives. We’ll repeat the same process to import the true negatives. Now we should see multiple datasets in our turbo prep screen.

In the above, even though we’ve only imported 2 datasets, remember that we transformed the true positives by adding a column called insiderthreat, which is a true/false boolean. We do the same with the true negatives, and you’ll eventually end up with 4 of these listings.

We’ll need to merge the true positives and true negatives into a ‘training set’ before we get to do anything fun with it. But first, we also need to drop columns that we don’t think are relevant or useful, such as the transaction ID and the description column of scraped website keywords. None of the other record types have the latter, so it would contain a bunch of empty (null) values that aren’t useful for calculating weights.
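Outside of RapidMiner, the same column pruning can be sketched with pandas. This is a sketch under assumptions: the column names `id` and `content` are hypothetical stand-ins for the transaction ID and the website keyword description, the rows are made up, and the 50% null threshold is an arbitrary choice for the example.

```python
import pandas as pd

# Made-up rows; only the http record carries website keywords.
frame = pd.DataFrame(
    {
        "id": ["{A1}", "{B2}", "{C3}", "{D4}"],
        "user": ["U1", "U1", "U2", "U2"],
        "action": ["http://example.com", "Connect", "Logon", "Disconnect"],
        "content": ["job search resume", None, None, None],
    }
)

# Share of missing values per column, then the columns that are mostly empty.
null_share = frame.isna().mean()
sparse = null_share[null_share > 0.5].index.tolist()

# Drop the identifier plus any mostly-empty column.
reduced = frame.drop(columns=["id"] + sparse)
```

Checking the null share first gives you a quick, data-driven list of candidates rather than relying on eyeballing the spreadsheet.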

Important thought: As we’ve mentioned regarding other research papers, columns chosen for calculation (aka ‘feature sets’) that include complex strings have to be tokenized using natural language processing (NLP). This adds to your pre-processing requirements in addition to label encoding; in the Tensorflow + pandas Python method, it would usually require wrangling multiple data frames and merging them together based on column keys for each record. While this is automated for you in RapidMiner, in Tensorflow you’ll have to include it in your pre-processing script. More documentation about this can be found here.

Take note that we did not do this in our datasets, because you’ll see much later, in an optimized RapidMiner Studio recommendation, that a heavier weight and emphasis on the date and time made for more efficient feature sets with less complexity. You, on the other hand, may need NLP for sentiment analysis on different datasets and applications to add to the insider threat modeling.

Finishing your training set: Although we do not illustrate this, after you have imported both true negatives and true positives, click the “merge” button within the Turbo Prep menu, select both transformed datasets, and select the “Append” option, since both have been pre-sorted by date.

Continue to the Auto Model feature

Within RapidMiner Studio, we continue to the ‘Auto Model’ tab and utilize our selected aggregate ‘training’ data (remember, training data includes true positives and true negatives) to predict on the insiderthreat column (true or false).

We also notice what our actual balance is. We are still imbalanced, with only 9,001 non-threat records vs. ~14K threat records. This can always be padded with additional records should you choose. For now, we’ll live with it and see what we can accomplish with not-so-perfect data.

Here the auto modeler flags different feature columns in green and yellow, along with their respective correlation. The interesting thing is that it estimates the date to be of high correlation but less stable than action and vector.

Important thought: In our heads, as defenders, we would think every column applies to the feature set, as we’ve already reduced what we could as far as relevance and complexity. It’s also worth mentioning that this is based on a single event. Remember that insider threats often take multiple events, as we saw in the answers portion of insiders.csv. What the green indicators are showing us is unique, single-event record identification.

We’re going to use all the columns anyway because we think they are all relevant. We then move to the next screen on model types, and because we’re not domain experts we’re going to try almost all of them. We want the computer to re-run each model multiple times to find the optimized set of inputs and feature columns.

Remember that feature sets can include meta information based on insights from existing columns. We leave the default values for tokenization, and we want to extract date and text information. The free-form text is in the ‘Action’ column, with all the different URLs and event activity to which we want NLP applied. We also want to correlate between columns, rank the importance of columns, and explain predictions.

Note that in the above we’ve selected a bunch of heavy processing parameters for our batch job. On an 8-core single-threaded processor running Windows 10, with 24 GB of memory, a Radeon RX570 value series GPU, and SSDs, all of these models took about 6 hours in total to run with all the options set. After everything was completed, we had 8000+ models and 2600+ feature set combinations tested in our screen comparison.

According to RapidMiner Studio, the deep learning neural network methods aren’t the best ROI fit compared to the generalized linear model. There are no errors, though, and that’s worrisome: it might mean that we have poor quality data or an overfit issue with the model. Let’s take a look at deep learning, as it also reports a potential 100% accuracy, just to compare.

The Deep Learning model above was tested against 187 different combinations of feature sets, and the optimized model departs from our own thoughts as to which features would be good, which were mostly the vector and action. We see even more weight put on the tokens of interesting words in Action and on the dates. Surprisingly, we did not see anything related to “email” or the word “device” in the actions as part of the optimized model.

Not to worry, as this doesn’t mean we’re dead wrong. It just means the feature sets it selected in its training (columns and extracted meta columns) produced fewer errors in the training set. This could be because we don’t have enough diverse or high quality data in our set. In the previous screen above you saw an orange circle and a translucent square.

The orange circle indicates the model’s suggested optimizer function, and the square is our original selected feature set. If you examine the scale, our human-selected feature set had an error rate between 0.9% and 1%, which puts our accuracy close to the 99% mark, but only with a much more complex model (more layers and connections required in the neural net). That makes me feel a little better, and it just goes to show you that caution is needed when taking all of these at face value.

Tuning Considerations

Let’s say you don’t fully trust such a “100% accurate” model. We can try to re-run it using our feature sets in a vanilla manner, as pure token labels. We’re *not* going to extract date information or apply text tokenization via NLP, and we don’t want it to automatically create new feature set meta based on our original selections. Basically, we’re going to use a plain vanilla set of columns for the calculations.

So let’s re-run it looking at 3 different models, including the original best fit model and deep learning, with absolutely no optimization and no additional NLP applied. It’s as if we used only encoded label values in the calculations and not much else.

In the above, we get even worse results, with an error rate of 39% (a 61% accuracy) across pretty much all the models. Our selection is so reduced in complexity without text token extraction that even a more “primitive” Bayesian model (commonly used in basic email spam filter engines) seems to be just as accurate and has a fast compute time. This all looks bad, but let’s dig a little deeper:

When we select the details of the deep learning model again, we see the accuracy climb in linear fashion as more of the training set population is discovered and validated against. From an interpretation standpoint this shows us a few things:

- Our original primitive idea of feature sets, focusing on the vector and action frequency using only unique encoded values, is only about as good as a toss-up for an analyst trying to find a threat in the data. On the surface it appears that we gain at best 10% in our chances of detecting an insider threat.

- It also shows that even though action and vector were first flagged ‘green’ as better inputs for unique record events, the opposite was actually true for insider threat scenarios, where we need to think about multiple events for each incident/alert. In the optimized model, many of the weights and tokens used were time-correlated and action-specific token words.

- This also tells us that our base data quality for this set is rather low, and that we would need additional context and possibly sentiment analysis of each user for each unique event; the dataset includes an HR data metric, ‘OCEAN’, in the psychometric.csv file. Using tokens through NLP, we could possibly tune the inputs to include the column containing a mixture of nulls, so that the website descriptor words from the original data sets are available, and maybe files.csv, which would have to be merged into our training set using time and transaction ID as keys when performing those joins in our data pre-processing.
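The joins mentioned in the last point could look roughly like the sketch below. It is only an illustration: the tiny data frames stand in for the real files, and the column names (`id`, `date`, `user`, `filename`, and the five OCEAN score columns) are assumptions that would need to be checked against the actual CSV headers.

```python
import pandas as pd

# Hypothetical miniature stand-ins for the event data, files.csv and psychometric.csv.
events = pd.DataFrame({
    "id": ["e1", "e2", "e3"],
    "date": ["2010-01-04 08:00:00", "2010-01-04 08:05:00", "2010-01-04 09:00:00"],
    "user": ["AAA0001", "BBB0002", "AAA0001"],
    "action": ["logon", "http", "file"],
})
files = pd.DataFrame({
    "id": ["e3"],
    "date": ["2010-01-04 09:00:00"],
    "filename": ["report.doc"],
})
psychometric = pd.DataFrame({
    "user": ["AAA0001", "BBB0002"],
    "O": [40, 25], "C": [30, 45], "E": [20, 35], "A": [35, 30], "N": [28, 22],
})

# Left joins keep every event row, even when no file or HR record matches.
merged = (
    events
    .merge(files, on=["id", "date"], how="left")   # time + transaction ID as keys
    .merge(psychometric, on="user", how="left")    # per-user OCEAN scores
)
print(merged[["id", "user", "action", "filename", "O"]])
```

Events without a matching file row end up with a null `filename`, which is the same "mixture of nulls" situation described above and would still need handling before training.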

Deploying your model optimized (or not)

While this section does not show screenshots, the last step in RapidMiner Studio is to deploy the optimized or non-optimized model of your choosing. Deploying locally within Studio won’t do much for you other than letting you re-use a model that you really like and load new data through the Studio application. You would need RapidMiner Server to automate local or remote deployments and integrate them with production applications. We do not illustrate such steps here, but there is great documentation on their site at: https://docs.rapidminer.com/latest/studio/guided/deployments/

But what about Tensorflow 2.0 and Keras?

Maybe RapidMiner Studio wasn’t for us, and everyone talks about Tensorflow (TF) as one of the leading solutions. But TF does not have a GUI. The new TF v2.0 includes the Keras API as part of the installation, which makes creating the neural net layers much easier, along with getting your data ingested from a Python pandas DataFrame into model execution. Let’s get started.

As you recall from our manual steps, we start with data pre-processing. We re-use the same scenario 2 data set and will use basic label encoding, as we did with our non-optimized model in RapidMiner Studio, to show you the comparison in methods and the fact that it’s all statistics at the end of the day, based on algorithmic functions converted into libraries. Reusing the screenshot, remember that we did some manual pre-processing work and already converted the insiderthreat, vector, and date columns into numerical category values, like so:

I’ve placed a copy of the semi-scrubbed data on Github if you wish to review the intermediate dataset before we run the Python script to pre-process it further:

Let’s examine the Python code that gets us to the final state we want, which is:

The code can be copied below:

    import numpy as np
    import pandas as pd
    import tensorflow as tf

    from tensorflow import feature_column
    from tensorflow.keras import layers
    from sklearn.model_selection import train_test_split
    from pandas.api.types import CategoricalDtype

    # Use Pandas to create a dataframe
    # On Windows, to read the file from a path other than the run directory, see:
    # https://stackoverflow.com/questions/16952632/read-a-csv-into-pandas-from-f-drive-on-windows-7
    URL = 'https://raw.githubusercontent.com/dc401/tensorflow-insiderthreat/master/scenario2-training-dataset-transformed-tf.csv'
    dataframe = pd.read_csv(URL)
    #print(dataframe.head())

    # Show dataframe details for column types
    #print(dataframe.info())
    #print(pd.unique(dataframe['user']))

    # Label-encode the text columns, see https://pbpython.com/categorical-encoding.html
    dataframe["user"] = dataframe["user"].astype('category')
    dataframe["source"] = dataframe["source"].astype('category')
    dataframe["action"] = dataframe["action"].astype('category')
    dataframe["user_cat"] = dataframe["user"].cat.codes
    dataframe["source_cat"] = dataframe["source"].cat.codes
    dataframe["action_cat"] = dataframe["action"].cat.codes
    #print(dataframe.info())
    #print(dataframe.head())

    # Save dataframe with the new columns for future data mapping
    dataframe.to_csv('dataframe-export-allcolumns.csv')

    # Remove the old text columns
    del dataframe["user"]
    del dataframe["source"]
    del dataframe["action"]

    # Restore the original column names on the encoded columns
    dataframe.rename(columns={"user_cat": "user", "source_cat": "source", "action_cat": "action"}, inplace=True)
    print(dataframe.head())
    print(dataframe.info())

    # Save the cleaned-up, integer-only dataframe
    dataframe.to_csv('dataframe-export-int-cleaned.csv')

    # Split the dataframe into train, validation, and test
    train, test = train_test_split(dataframe, test_size=0.2)
    train, val = train_test_split(train, test_size=0.2)
    print(len(train), 'train examples')
    print(len(val), 'validation examples')
    print(len(test), 'test examples')

    # Create an input pipeline using tf.data
    # A utility method to create a tf.data dataset from a Pandas Dataframe
    def df_to_dataset(dataframe, shuffle=True, batch_size=32):
        dataframe = dataframe.copy()
        labels = dataframe.pop('insiderthreat')
        ds = tf.data.Dataset.from_tensor_slices((dict(dataframe), labels))
        if shuffle:
            ds = ds.shuffle(buffer_size=len(dataframe))
        ds = ds.batch(batch_size)
        return ds

    # Choose the columns needed for calculations (features)
    feature_columns = []
    for header in ["vector", "date", "user", "source", "action"]:
        feature_columns.append(feature_column.numeric_column(header))

    # Create the feature layer
    feature_layer = tf.keras.layers.DenseFeatures(feature_columns)

    # Set the batch size for the pipeline
    batch_size = 32
    train_ds = df_to_dataset(train, batch_size=batch_size)
    val_ds = df_to_dataset(val, shuffle=False, batch_size=batch_size)
    test_ds = df_to_dataset(test, shuffle=False, batch_size=batch_size)

    # Create, compile, and train the model
    model = tf.keras.Sequential([
        feature_layer,
        layers.Dense(128, activation='relu'),
        layers.Dense(128, activation='relu'),
        layers.Dense(1)
    ])

    model.compile(optimizer='adam',
                  loss=tf.keras.losses.BinaryCrossentropy(from_logits=True),
                  metrics=['accuracy'])

    model.fit(train_ds,
              validation_data=val_ds,
              epochs=5)

    # Evaluate on the held-out test set
    loss, accuracy = model.evaluate(test_ds)
    print("Accuracy", accuracy)

In our scenario we’re going to ingest the data from Github. I’ve included in the comments a pointer to the method for reading it from a file local to your disk instead. One thing to point out is that we use the Pandas dataframe construct and its methods to manipulate the columns, using label encoding for the input. Note that this is not the optimized approach that RapidMiner Studio reported to us.
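For readers who prefer to work from a local copy, a minimal sketch of the same load step is shown here. The file name and the single sample row are made up so the snippet runs on its own; in practice you would point `csv_path` at your own download of the dataset.

```python
import tempfile
from pathlib import Path

import pandas as pd

# Hypothetical local file, written here only so the example is self-contained.
# In practice, set csv_path to wherever you saved the CSV from Github.
csv_path = Path(tempfile.gettempdir()) / "insiderthreat-sample.csv"
csv_path.write_text(
    "vector,date,user,source,action,insiderthreat\n"
    "1,2,AAA0001,pc1,logon,0\n"
)

# read_csv accepts a local path just as it accepts a URL.
dataframe = pd.read_csv(csv_path)
print(dataframe.dtypes)
```

Using `pathlib.Path` avoids most of the backslash-escaping problems that come up with Windows paths.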

We’re still using the same feature set columns as in the second round of modeling we re-ran in the previous screens, but this time in Tensorflow for method demonstration.

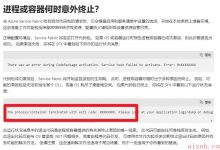

Note that in the above there is an error: vector still shows ‘object’ as its DType. I was pulling my hair out looking, and found I needed to update the dataset because I had not converted all the values in the vector column into numerical categories as I originally thought. Apparently, I was missing one. Once this was corrected and the errors were gone, the model training ran without a problem.
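One way to track down this kind of leftover value, sketched here on made-up data rather than the real file, is to coerce the column to numbers and list whatever fails to parse. The sample values are assumptions for illustration only.

```python
import pandas as pd

# Hypothetical sample: one vector value was never mapped to its numeric code.
dataframe = pd.DataFrame({"vector": ["1", "2", "http", "3"]})

# Coerce to numbers; anything that cannot be parsed becomes NaN.
as_numbers = pd.to_numeric(dataframe["vector"], errors="coerce")

# The rows that turned into NaN are the values still needing an encoding.
leftovers = dataframe.loc[as_numbers.isna(), "vector"].unique()
print("Values still needing an encoding:", list(leftovers))
```

A single stray string is enough to make pandas treat the whole column as text, which is why the column type is a quick first check before building the feature columns.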

Unlike RapidMiner Studio, we can’t just hand over one large training set and let the system do it for us. We must divide the data set into smaller pieces that are run through in batches: a training subset, with which the model is trained using known correct data of true/false insider threats, and reserved portions that are split off and held back for validation and testing only.
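To make the proportions concrete, the sketch below works out how many rows land in each piece when a 20% hold-out is applied twice, as in the script. The row count of 1000 is hypothetical, and the rounding (hold-out sizes rounded up) is my assumption about how the split behaves rather than something stated in the article.

```python
import math

def split_sizes(n_rows, test_size=0.2, val_size=0.2):
    """Return (train, val, test) row counts for two successive hold-out splits."""
    n_test = math.ceil(n_rows * test_size)     # first split: hold out the test set
    remaining = n_rows - n_test
    n_val = math.ceil(remaining * val_size)    # second split: hold out validation
    n_train = remaining - n_val
    return n_train, n_val, n_test

# Hypothetical row count, chosen only to make the arithmetic easy to follow.
print(split_sizes(1000))  # (640, 160, 200)
```

In other words, the two successive 20% splits leave roughly 64% of the rows for training, 16% for validation, and 20% for the final test.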

Next we need to choose our feature columns, which again are the ‘non-optimized’ 5 columns of encoded data. We use a batch size of 32 for the pipeline, as we defined it early on; each round of training (epoch) passes over the training set in batches of that size.
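As a rough illustration of what the batch size means in practice, this sketch counts how many batches one pass over the training data takes. The 640-row training set is a hypothetical figure, not the size of the real data.

```python
import math

def batches_per_epoch(n_train_rows, batch_size=32):
    """Number of batches needed to pass over the training set once."""
    return math.ceil(n_train_rows / batch_size)

n_train_rows = 640   # hypothetical training set size
epochs = 5           # matches the number of epochs used in the script

steps = batches_per_epoch(n_train_rows)
print(steps, "batches per epoch,", steps * epochs, "weight updates in total")
```

A final partial batch still counts as a batch, so 650 rows would take 21 batches per epoch rather than 20.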

Keep in mind that up to this point we have not executed anything related to tensors or even created a model. It is all just data prep and building the ‘pipeline’ that feeds the tensors. Next we create the model from layers in sequential format using Keras, then compile it using the optimizer and loss function from Google’s TF tutorial demo, with accuracy as the reported metric. We fit and validate the model over 5 epochs and then print the resulting accuracy.
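The final `Dense(1)` layer has no activation, so it outputs a raw score (a logit) rather than a probability, which is why the loss is configured with `from_logits=True`. The plain-Python sketch below shows the underlying math for a single example; it is an illustration of the formula, not TensorFlow's own implementation.

```python
import math

def sigmoid(logit):
    """Convert a raw model score into a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-logit))

def bce_from_logit(logit, label):
    """Numerically stable binary cross-entropy for one example (label is 0 or 1)."""
    return max(logit, 0.0) - logit * label + math.log1p(math.exp(-abs(logit)))

# For a true insider threat (label 1), a higher logit means a lower loss.
for logit in (-2.0, 0.0, 2.0):
    print(logit, round(sigmoid(logit), 3), round(bce_from_logit(logit, 1), 3))
```

A logit of 0 corresponds to a probability of 0.5, the point where the model is undecided between the two classes.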

Welcome to the remaining 10% of your journey in applying AI to your insider threat data set!

Let’s run it again and, well, now we see accuracy of around 61%, like last time! So again, this just shows that the majority of your outcome depends on the data science process itself and the quality of the pre-processing, tuning, and data, not so much on which core software solution you go with. Without optimizing and testing multiple models and experimenting with varying feature sets, our primitive models will be at best about 10% better than the random chance that a human analyst may or may not catch the threat when reviewing the same data.
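The "better than random chance" comparison treats 50% as the baseline, which only holds when the two classes are roughly balanced. A quick sanity check, sketched here with made-up label lists rather than the real data, is to compute the accuracy of always guessing the most common class.

```python
from collections import Counter

def majority_baseline_accuracy(labels):
    """Accuracy obtained by always predicting the most frequent label."""
    counts = Counter(labels)
    return max(counts.values()) / len(labels)

# Hypothetical label distributions, not taken from the dataset.
balanced = [0] * 50 + [1] * 50
imbalanced = [0] * 90 + [1] * 10

print(majority_baseline_accuracy(balanced))    # 0.5
print(majority_baseline_accuracy(imbalanced))  # 0.9
```

If the labels in your own data are heavily skewed, a model's accuracy should be compared against this baseline rather than against 50%.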

Where is the ROI for AI in Cyber Security

For simple project tasks that can be accomplished on individual events, treated as an alert rather than an incident, and handled by non-domain experts, AI-enabled defenders in SOCs or threat hunting teams can achieve ROI faster on deciding whether things are anomalous or not using baseline data. Examples include anomalous user agent strings that may indicate C2 infections, or K-means or KNN clustering based on cyber threat intelligence IOCs that may show specific APT similarities.
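As a very simple example of the baseline idea, the sketch below flags user agent strings that make up only a tiny share of observed traffic. It is a frequency count rather than a trained model, and the sample log and the 5% threshold are both assumptions for illustration.

```python
from collections import Counter

def rare_user_agents(user_agents, max_share=0.05):
    """Return user agents whose share of all observations is at or below max_share."""
    counts = Counter(user_agents)
    total = len(user_agents)
    return sorted(ua for ua, n in counts.items() if n / total <= max_share)

# Hypothetical proxy log sample: two common browsers and two one-off clients.
observed = (
    ["Mozilla/5.0 (Windows NT 10.0)"] * 60
    + ["Mozilla/5.0 (Macintosh)"] * 38
    + ["curl/7.58.0", "xyz-agent/0.1"]
)

print(rare_user_agents(observed))  # ['curl/7.58.0', 'xyz-agent/0.1']
```

Rare does not mean malicious, so the output is a short list for an analyst to review, not a verdict.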

There are some great curated lists on Github that may give your team ideas on what else they can pursue with simple methods like those we’ve demonstrated in this article. Whatever software solution you elect to use, chances are that our alert payloads really need NLP applied and an appropriately sized neural network created to achieve more accurate modeling. Feel free to modify our base Python template and try it out yourself.

Comparing the ~60% accuracy vs. other real-world cyber use cases

I have to admit, I was pretty disappointed in myself at first, even though we knew this was not a tuned model given the labels and input selection I had. But when we compare it with other, more complex datasets and models in communities such as Kaggle, it really isn’t as bad as we first thought.

Microsoft hosted a malware detection competition for the community and provided enriched datasets. The competition’s highest scores show 67% prediction accuracy, and this was in 2019 with over 2400 teams competing. One member shared their code, which scored 63% and was released free to the public as a great template if you want to investigate further. The shared code is titled LightGBM.

Compared to the top of the leaderboard, the public-facing solution was only about 5% “worse.” Is a 5% difference a huge amount in the world of data science? Yes (though it also depends on how you measure confidence levels). So out of 2400+ teams, the best model achieved an accuracy of ~68%. But from a budgeting ROI standpoint, when a CISO asks for next FY’s CAPEX, 68% isn’t going to cut it for most security programs.

While somewhat discouraging, it’s important to remember that there are dedicated data science and DevOps professionals who spend their entire careers doing this to get models up to the 95% or better range. To achieve this, tons of model testing, additional data, and additional feature set extraction are required (as we saw RapidMiner Studio do automatically for us).

Where do we go from here for applying AI to insider threats?

Obviously, this is a complex task. Researchers at Deakin University published a paper called “Image-Based Feature Representation for Insider Threat Classification”, which was mentioned briefly in the earlier portion of the article. They discuss the measures they took to create a feature set based on an extended amount of data provided by the same US-CERT CMU dataset, creating ‘images’ out of it that can be used for prediction classification, where they achieved 98% accuracy.

Within the paper, the researchers also examine prior models for insider threat such as ‘BAIT’, which achieved at best 70% accuracy, also using imbalanced data. Security programs with enough budget can have in-house models made from scratch with the help of data scientists and DevOps engineers who can turn this research paper into applicable code.

How can cyber defenders get better at AI and begin to develop skills in house?

Focus less on the solution and more on the data science and pre-processing. I took the EdX Data8x courseware (3 courses in total), and the referenced book (also free) provides great details and methods anyone can use to properly examine data and know what they’re looking at during the process. This course set, among others, can really augment and enhance existing cyber security skills and prepare us to do things like:

- Evaluate vendors providing ‘AI’-enabled services and solutions on their actual effectiveness, such as by asking what data pre-processing, feature sets, model architecture, and optimization functions are used

- Build use cases and augment SOC or threat hunt programs with more informed choices of AI-specific modeling on what is considered anomalous

- Pipeline and automate high-quality data into proven, tried-and-true models for highly effective alerting and response

Closing

I hope you’ve enjoyed this brief article and tutorial on cyber security applications for insider threats, or really on getting any data set into a neural network, using two different solutions. If you’re interested in professional services or an MSSP to bolster your organization’s cyber security, please feel free to contact us at www.scissecurity.com

Translated from: https://towardsdatascience.com/insider-threat-detection-with-ai-using-tensorflow-and-rapidminer-studio-a7d341a021ba
