I've recently been learning Go and used regular expressions while scraping website data, so here is a short summary.

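Go's regular expressions use the RE2 syntax (roughly: matching runs in linear time, at the cost of no backreferences or lookaround).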
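A quick way to get a feel for what RE2 syntax accepts is to try compiling a few patterns. This is only a minimal sketch; `compiles` is a helper name made up for the illustration, not part of the `regexp` package.

```go
package main

import (
	"fmt"
	"regexp"
)

// compiles reports whether Go's regexp package accepts the pattern.
// (Hypothetical helper, introduced only for this example.)
func compiles(pattern string) bool {
	_, err := regexp.Compile(pattern)
	return err == nil
}

func main() {
	patterns := []string{
		`(a|b)+c`, // ordinary syntax, accepted
		`(a)\1`,   // backreference, rejected by RE2
		`a(?=b)`,  // lookahead, rejected by RE2
	}
	for _, p := range patterns {
		fmt.Printf("%-10s compiles: %v\n", p, compiles(p))
	}
}
```

`regexp.Compile` returns an error for an unsupported pattern, whereas `regexp.MustCompile` panics, so the latter is best kept for patterns known at build time.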

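Characters

`.`: matches any single character (except a newline, by default), e.g. `abc.` matches abcd, abcx, abc9.

`[]`: matches any one of the characters inside the brackets, e.g. `[abc]d` matches ad, bd, cd (but not 1d).

`-`: inside `[]`, denotes a range, e.g. `[A-Za-z0-9]`.

`^`: as the first character inside `[]`, matches any character except the ones listed, e.g. `[^xy]a` matches aa and da, but not xa or ya.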
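The character rules above can be checked with a few calls to `MatchString`. A small sketch follows; `fullMatch` is a hypothetical helper that anchors the pattern with `^` and `$` so that only whole-string matches count (outside of brackets, `^` means "start of text", not negation).

```go
package main

import (
	"fmt"
	"regexp"
)

// fullMatch reports whether pattern matches the whole of s.
// (Hypothetical helper, introduced only for this example.)
func fullMatch(pattern, s string) bool {
	return regexp.MustCompile(`^(?:` + pattern + `)$`).MatchString(s)
}

func main() {
	fmt.Println(fullMatch(`abc.`, "abcd"))     // true: . stands for "d"
	fmt.Println(fullMatch(`[abc]d`, "bd"))     // true: "b" is in the set
	fmt.Println(fullMatch(`[abc]d`, "1d"))     // false: "1" is not in the set
	fmt.Println(fullMatch(`[A-Za-z0-9]`, "_")) // false: "_" is outside all three ranges
	fmt.Println(fullMatch(`[^xy]a`, "da"))     // true: "d" is neither x nor y
	fmt.Println(fullMatch(`[^xy]a`, "xa"))     // false: "x" is excluded
}
```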

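Quantifiers

`?`: the preceding unit matches 0 or 1 times.

`+`: the preceding unit matches 1 or more times.

`*`: the preceding unit matches 0 or more times.

`{n,m}`: explicit lower and upper bounds on the count, e.g. an IP address: `[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}` (the dots are escaped so that they only match a literal `.`).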
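Here is a short sketch of the quantifiers in action, including the IP address pattern. The name `looksLikeIPv4` is made up for the example, and the pattern only checks the shape (one to three digits per part), not that each number is at most 255.

```go
package main

import (
	"fmt"
	"regexp"
)

// ipLike matches four dot-separated groups of 1 to 3 digits.
// The dots are escaped; an unescaped . would match any character.
var ipLike = regexp.MustCompile(`^[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}$`)

// looksLikeIPv4 is a hypothetical helper used only in this example.
func looksLikeIPv4(s string) bool {
	return ipLike.MatchString(s)
}

func main() {
	fmt.Println(regexp.MustCompile(`^colou?r$`).MatchString("color")) // true: "u" appears 0 times
	fmt.Println(regexp.MustCompile(`^ab+$`).MatchString("a"))         // false: + needs at least one "b"
	fmt.Println(regexp.MustCompile(`^ab*$`).MatchString("a"))         // true: * allows zero "b"

	fmt.Println(looksLikeIPv4("192.168.1.1")) // true
	fmt.Println(looksLikeIPv4("192x168x1x1")) // false: the dots must be literal
	fmt.Println(looksLikeIPv4("1234.1.1.1"))  // false: four digits exceed {1,3}
	fmt.Println(looksLikeIPv4("999.1.1.1"))   // true: only the digit count is checked
}
```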

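Other

`\`: escape character.

`|`: alternation (or).

`()`: groups a unit. If the string itself contains parentheses, match them with `[(]` and `[)]`, e.g. `[(] aaa. [)]`.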
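Escaping, alternation, and grouping often appear together. The sketch below uses a made-up pattern and input (`petRe`, `petCount`) to show a group with `|`, literal parentheses written as `[(]` and `[)]`, and an escaped dot.

```go
package main

import (
	"fmt"
	"regexp"
)

// petRe: (cat|dog) is a group with alternation, s? is an optional plural,
// [(] and [)] match literal parentheses, and (\d+) captures the number.
var petRe = regexp.MustCompile(`(cat|dog)s? [(](\d+)[)]`)

// petCount returns the full match followed by the two captured groups,
// or nil when nothing matches. (Hypothetical helper for this example.)
func petCount(s string) []string {
	return petRe.FindStringSubmatch(s)
}

func main() {
	fmt.Printf("%q\n", petCount("I have dogs (3) at home")) // ["dogs (3)" "dog" "3"]
	fmt.Printf("%q\n", petCount("I have birds (2)"))        // []

	fmt.Println(regexp.MustCompile(`a\.b`).MatchString("axb")) // false: \. is a literal dot
	fmt.Println(regexp.MustCompile(`a.b`).MatchString("axb"))  // true: . is any character
}
```

Writing `\(` and `\)` is equivalent to `[(]` and `[)]`; the bracket form just avoids doubled backslashes in ordinary (non-raw) Go string literals.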

Methods

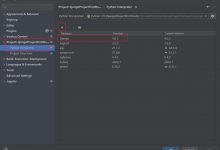

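```go
// Argument: the pattern string. Returns a *regexp.Regexp (panics if the pattern is invalid).
re := regexp.MustCompile(pattern)

// Arguments: the text to search and the maximum number of matches (-1 for all).
// Returns a 2D slice: each row holds the full match followed by its capture groups.
var result [][]string = re.FindAllStringSubmatch(data, -1)
```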
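To make the shape of the returned slice concrete, here is a small self-contained sketch. The input string, the pattern, and the helper name `findPairs` are all invented for the illustration.

```go
package main

import (
	"fmt"
	"regexp"
)

// findPairs returns up to n matches of the pattern in data (n = -1 means all).
// Each row is [full match, id, name]. (Hypothetical helper for this example.)
func findPairs(data string, n int) [][]string {
	re := regexp.MustCompile(`id=(\d+) name=(\w+)`)
	return re.FindAllStringSubmatch(data, n)
}

func main() {
	data := "id=1 name=go; id=22 name=regexp"

	for _, m := range findPairs(data, -1) {
		// m[0] is the whole match, m[1] and m[2] are the capture groups.
		fmt.Printf("full=%q id=%s name=%s\n", m[0], m[1], m[2])
	}

	// With n = 1 the search stops after the first match.
	fmt.Println(len(findPairs(data, 1)))
}
```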

Crawler

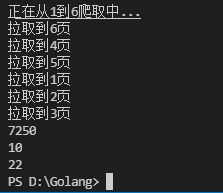

Scrape the view, comment, and recommendation counts of every post on a cnblogs (博客园) blog:

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"regexp"
	"strconv"
	"sync/atomic"
)

// Running totals. Several goroutines update them at once,
// so they are only touched through sync/atomic.
var (
	readCount    int64
	commentCount int64
	diggCount    int64
)

// Patterns for the three counters on a list page. The Chinese labels are
// part of the page markup and must stay as they are. [(] and [)] match
// literal parentheses; (?s:(.*?)) lazily captures the text between them.
var (
	readRe    = regexp.MustCompile(`post-view-count">阅读[(](?s:(.*?))[)]</span>`)
	commentRe = regexp.MustCompile(`post-comment-count">评论[(](?s:(.*?))[)]</span>`)
	diggRe    = regexp.MustCompile(`post-digg-count">推荐[(](?s:(.*?))[)]</span>`)
)

// HttpGet fetches url and returns the response body as a string.
func HttpGet(url string) (string, error) {
	resp, err := http.Get(url)
	if err != nil {
		return "", err
	}
	defer resp.Body.Close()

	body, err := io.ReadAll(resp.Body)
	if err != nil {
		return "", err
	}
	return string(body), nil
}

// sumMatches adds up the first capture group of every match of re in html.
func sumMatches(re *regexp.Regexp, html string) int64 {
	var total int64
	for _, m := range re.FindAllStringSubmatch(html, -1) {
		n, err := strconv.Atoi(m[1])
		if err != nil {
			fmt.Println("string2int err:", err)
			continue
		}
		total += int64(n)
	}
	return total
}

// SpiderPageDB crawls one list page and adds its counters to the totals.
// It always sends index on page when it is done, even on error,
// so the receiver in working never waits forever.
func SpiderPageDB(index int, page chan<- int) {
	defer func() { page <- index }()

	url := "https://www.cnblogs.com/littleperilla/default.html?page=" + strconv.Itoa(index)
	result, err := HttpGet(url)
	if err != nil {
		fmt.Println("HttpGet err:", err)
		return
	}

	atomic.AddInt64(&readCount, sumMatches(readRe, result))
	atomic.AddInt64(&commentCount, sumMatches(commentRe, result))
	atomic.AddInt64(&diggCount, sumMatches(diggRe, result))
}

// working crawls pages start..end concurrently and waits for all of them.
func working(start, end int) {
	fmt.Printf("Crawling pages %d to %d...\n", start, end)

	// The channel tells the main goroutine when each worker has finished.
	page := make(chan int)

	// One goroutine per page, all running at the same time.
	for i := start; i <= end; i++ {
		go SpiderPageDB(i, page)
	}
	for i := start; i <= end; i++ {
		fmt.Printf("Finished page %d\n", <-page)
	}
}

// main reads the first and last page number, then prints the totals.
func main() {
	var start, end int
	fmt.Print("startPos:")
	fmt.Scan(&start)
	fmt.Print("endPos:")
	fmt.Scan(&end)

	working(start, end)

	fmt.Println("Views:", atomic.LoadInt64(&readCount))
	fmt.Println("Comments:", atomic.LoadInt64(&commentCount))
	fmt.Println("Recommendations:", atomic.LoadInt64(&diggCount))
}
```
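Before running the crawler against the live site, the view-count pattern can be tried offline. The sketch below assumes a page fragment shaped the way the pattern implies; the sample HTML is invented for the test and is not copied from cnblogs, and `sumViews` is a hypothetical helper.

```go
package main

import (
	"fmt"
	"regexp"
	"strconv"
)

// viewRe is the view-count pattern from the crawler. The lazy (.*?) stops
// at the first ")</span>" after each "阅读(".
var viewRe = regexp.MustCompile(`post-view-count">阅读[(](?s:(.*?))[)]</span>`)

// sumViews totals every captured view count in html, skipping
// captures that are not integers. (Hypothetical helper.)
func sumViews(html string) int {
	total := 0
	for _, m := range viewRe.FindAllStringSubmatch(html, -1) {
		n, err := strconv.Atoi(m[1])
		if err != nil {
			continue
		}
		total += n
	}
	return total
}

func main() {
	// Assumed markup, made up for this check.
	sample := `<span class="post-view-count">阅读(12)</span>
<span class="post-comment-count">评论(3)</span>
<span class="post-view-count">阅读(30)</span>`

	fmt.Println(sumViews(sample)) // 42
}
```

Since the counters are plain numbers, a stricter capture such as `(\d+)` would also work and would make the `strconv.Atoi` error path unreachable for matched text.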

爱站程序员基地
