[toc]

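The requests library

Python's standard library already ships the urllib module, which covers most of the functionality we normally need, but its API is awkward to use. Requests bills itself as "HTTP for Humans", which signals a simpler and more convenient interface.

Requests is written in Python and built on top of urllib3. It is considerably more convenient than urllib, saves a lot of work, and fully covers typical HTTP testing needs.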

安装

-

pip install requests

-

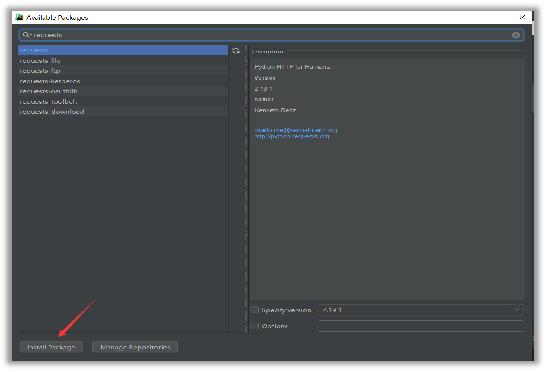

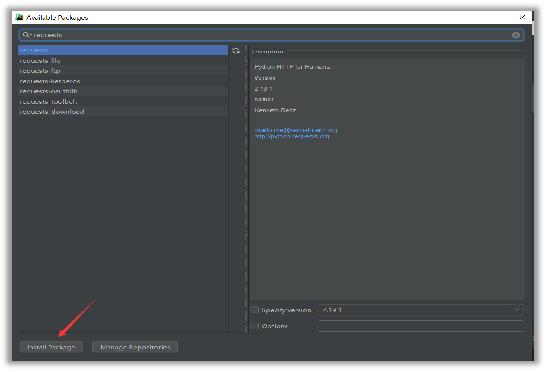

pycharm安装

更多高级用法参考官网文档:

- 中文文档:http://docs.python-requests.org/zh_CN/latest/index.html

Source excerpt

    """
    Requests HTTP Library
    ~~~~~~~~~~~~~~~~~~~~~

    Requests is an HTTP library, written in Python, for human beings.
    Basic GET usage:

       >>> import requests
       >>> r = requests.get('https://www.python.org')
       >>> r.status_code
       200
       >>> b'Python is a programming language' in r.content
       True

    ... or POST:

       >>> payload = dict(key1='value1', key2='value2')
       >>> r = requests.post('https://httpbin.org/post', data=payload)
       >>> print(r.text)
       {
         ...
         "form": {
           "key1": "value1",
           "key2": "value2"
         },
         ...
       }

    The other HTTP methods are supported - see `requests.api`. Full documentation
    is at <https://requests.readthedocs.io>.

    :copyright: (c) 2017 by Kenneth Reitz.
    :license: Apache 2.0, see LICENSE for more details.
    """

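The docstring shows that the main use cases are GET and POST requests, and it demonstrates how to read the status code and the response text. The other HTTP methods are documented in `requests.api`. Common usage is as follows:

Sending a GET request

To pass data in the URL's query string, use the `params` argument. requests URL-encodes the values automatically, so no manual encoding is needed.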

    import requests

    url = "http://httpbin.org/get"
    payload = {'key': 'value', 'key2': 'value'}
    r = requests.get(url, params=payload)
    print(r.text)
    print(r.url)  # http://httpbin.org/get?key=value&key2=value

Passing a list as a value:

    import requests

    payload = {'key1': 'value1', 'key2': ['value2', 'value3']}
    r = requests.get('http://httpbin.org/get', params=payload)
    print(r.url)  # http://httpbin.org/get?key1=value1&key2=value2&key2=value3

Note: any dictionary key whose value is `None` is not added to the URL's query string.
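To see how `params` is encoded without sending anything over the network, one option is to build the request and inspect its prepared URL. The sketch below uses `requests.Request(...).prepare()`; the `page` key is a made-up parameter, included only to show that `None` values are dropped.

```python
import requests

# Hypothetical query parameters; 'page' is None and should be omitted.
payload = {'key1': 'value1', 'key2': ['value2', 'value3'], 'page': None}

# Build the request without sending it, then inspect the encoded URL.
prepared = requests.Request('GET', 'http://httpbin.org/get', params=payload).prepare()
print(prepared.url)

# Non-ASCII values are percent-encoded as UTF-8.
prepared_cn = requests.Request('GET', 'https://www.baidu.com/s', params={'wd': '中国'}).prepare()
print(prepared_cn.url)
```

Inspecting the prepared request this way is handy for debugging, since it shows the final URL before any traffic is generated.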

    import requests

    # Add the headers argument
    headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/73.0.3683.86 Safari/537.36'}

    # https://www.baidu.com/s?&word=%E4%B8%AD%E5%9B%BD
    url = 'https://www.baidu.com/s'
    kw = {'wd': '中国'}

    # params accepts a dict or a string of query parameters; a dict is
    # URL-encoded automatically, so urlencode() is not needed
    response = requests.get(url, headers=headers, params=kw)
    print(response)  # <Response [200]>

    # More attributes and methods
    # print(response.text)  # the body decoded to a str
    print(response.content)  # the body as raw bytes
    print(response.status_code)  # 200
    print(response.url)  # https://www.baidu.com/s?wd=%E4%B8%AD%E5%9B%BD (the full URL)
    print(response.encoding)  # utf-8

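The difference between response.text and response.content:

- `response.content`: the data exactly as it was fetched from the network, with no decoding applied, so its type is bytes. Strings stored on disk or sent over the network are in fact always bytes.

- `response.text`: a str, produced by requests decoding `response.content`. Decoding needs an encoding, and requests picks one by guessing. The guess is sometimes wrong, which yields garbled text. In that case, decode manually, for example with `response.content.decode('utf8')`.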
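The effect of a wrong encoding guess can be reproduced with plain bytes, no network required. This is a minimal sketch with made-up sample data: the bytes stand in for `response.content` from a GBK-encoded page.

```python
# Bytes as they might arrive from a GBK-encoded page (made-up sample data).
raw = '中国'.encode('gbk')

# Decoding with the wrong codec produces garbled text.
wrong = raw.decode('iso-8859-1')

# Decoding with the page's real encoding recovers the original string.
right = raw.decode('gbk')

print(repr(wrong))
print(right)
```

With a real response, the same fix can also be applied by setting `response.encoding = 'gbk'` before reading `response.text`; `response.apparent_encoding` offers a content-based guess when the correct encoding is unknown.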

Sending a POST request

    r = requests.post('http://httpbin.org/post', data={'key': 'value'})

    import requests

    url = 'https://i.meishi.cc/login.php?redirect=https%3A%2F%2Fwww.meishij.net%2F'
    headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/73.0.3683.86 Safari/537.36'}
    data = {
        'redirect': 'https://www.meishij.net/',
        'username': '[email protected]',
        'password': 'wq15290884759.',
    }
    resp = requests.post(url, headers=headers, data=data)
    print(resp.text)
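It can help to see what `data=` actually puts on the wire. The sketch below prepares two POST requests without sending them and prints their body and `Content-Type`. The `json=` variant is not used elsewhere in this article; it is included here only as a contrast, on the assumption that some APIs expect JSON rather than form data.

```python
import requests

url = 'http://httpbin.org/post'

# data= with a dict is sent as an HTML form body.
form_req = requests.Request('POST', url, data={'key': 'value'}).prepare()
print(form_req.headers['Content-Type'])
print(form_req.body)

# json= serializes the dict to a JSON body instead.
json_req = requests.Request('POST', url, json={'key': 'value'}).prepare()
print(json_req.headers['Content-Type'])
print(json_req.body)
```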

Requests' simple API makes every HTTP request type obvious. What about the other HTTP request types: PUT, DELETE, HEAD and OPTIONS? They are just as simple:

    >>> r = requests.put('http://httpbin.org/put', data={'key': 'value'})
    >>> r = requests.delete('http://httpbin.org/delete')
    >>> r = requests.head('http://httpbin.org/get')
    >>> r = requests.options('http://httpbin.org/get')

Using a proxy with requests

Just pass the `proxies` argument to the request method (for example `get` or `post`).

    import requests

    proxy = {'http': '111.77.197.127:9999'}
    url = 'http://www.httpbin.org/ip'
    resp = requests.get(url, proxies=proxy)
    print(resp.text)
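Public proxies are often slow or offline, so in practice a timeout and some error handling are useful. The following is a sketch rather than a definitive recipe: `build_proxies` and `fetch_ip` are hypothetical helper names, and the proxy address is simply the one from the example above, which may no longer be reachable.

```python
import requests


def build_proxies(host, port):
    """Return a proxies mapping that routes both HTTP and HTTPS through one proxy."""
    proxy_url = f'http://{host}:{port}'
    return {'http': proxy_url, 'https': proxy_url}


def fetch_ip(proxies, timeout=5):
    """Ask httpbin which IP it sees; return None if the request fails."""
    try:
        resp = requests.get('http://www.httpbin.org/ip', proxies=proxies, timeout=timeout)
        resp.raise_for_status()
        return resp.text
    except requests.RequestException as exc:
        print(f'proxy request failed: {exc}')
        return None


proxies = build_proxies('111.77.197.127', 9999)
print(proxies)
```

Calling `fetch_ip(proxies)` then performs the actual request; if the proxy works, the IP in the response should be the proxy's rather than your own.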

Cookie

If a response contains cookies, you can read them through its `cookies` attribute.

    import requests

    resp = requests.get('http://www.baidu.com/')
    print(resp.cookies)
    print(resp.cookies.get_dict())
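`resp.cookies` is a `RequestsCookieJar`. To get a feel for the object without making a request, a jar can be built by hand, as in this sketch; the cookie names and values are placeholders.

```python
import requests

# Build a cookie jar by hand; the names and values are placeholders.
jar = requests.cookies.RequestsCookieJar()
jar.set('session_id', 'abc123', domain='httpbin.org', path='/')
jar.set('theme', 'dark', domain='httpbin.org', path='/')

# get_dict() flattens the jar into a plain name -> value dictionary.
print(jar.get_dict())

# Individual cookies can also be read like dictionary items.
print(jar['theme'])
```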

Simulating a login with a cookie

    import requests

    url = 'https://www.zhihu.com/hot'
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/73.0.3683.86 Safari/537.36',
        'cookie': '_zap=59cde9c3-c5c0-4baa-b756-fa16b5e72b10; d_c0="APDi1NJcuQ6PTvP9qa1EKY6nlhVHc_zYWGM=|1545737641"; __gads=ID=237616e597ec37ad:T=1546339385:S=ALNI_Mbo2JturZesh38v7GzEeKjlADtQ5Q; _xsrf=pOd30ApWQ2jihUIfq94gn2UXxc0zEeay; q_c1=1767e338c3ab416692e624763646fc07|1554209209000|1545743740000; tst=h; __utma=51854390.247721793.1554359436.1554359436.1554359436.1; __utmc=51854390; __utmz=51854390.1554359436.1.1.utmcsr=zhihu.com|utmccn=(referral)|utmcmd=referral|utmcct=/hot; __utmv=51854390.100-1|2=registration_date=20180515=1^3=entry_date=20180515=1; l_n_c=1; l_cap_id="OWRiYjI0NzJhYzYwNDM3MmE2ZmIxMGIzYmQwYzgzN2I=|1554365239|875ac141458a2ebc478680d99b9219c461947071"; r_cap_id="MmZmNDFkYmIyM2YwNDAxZmJhNWU1NmFjOGRkNDNjYjc=|1554365239|54372ab1797cba8c4dd224ba1845dd7d3f851802"; cap_id="YzQwNGFlYWNmNjY3NDFhNGI4MGMyYjZjYjRhMzQ1ZmE=|1554365239|385cc25e3c4e3b0b68ad5747f623cf3ad2955c9f"; n_c=1; capsion_ticket="2|1:0|10:1554366287|14:capsion_ticket|44:MmE5YzNkYjgzODAyNDgzNzg5MTdjNmE3NjQyODllOGE=|40d3498bedab1b7ba1a247d9fc70dc0e4f9a4f394d095b0992a4c85e32fd29be"; z_c0="2|1:0|10:1554366318|4:z_c0|92:Mi4xOWpCeUNRQUFBQUFBOE9MVTBseTVEaVlBQUFCZ0FsVk5iZzJUWFFEWi1JMkxnQXlVUXh2SlhYb3NmWks3d1VwMXRB|81b45e01da4bc235c2e7e535d580a8cc07679b50dac9e02de2711e66c65460c6"; tgw_l7_route=578107ff0d4b4f191be329db6089ff48',
    }
    resp = requests.get(url, headers=headers)
    print(resp.text)
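An alternative to pasting the raw string into the `cookie` header is to split it into a dict and pass it through the `cookies` argument. The sketch below assumes a simple `name=value; name2=value2` format; `parse_cookie_header` is a hypothetical helper, and the short cookie string is a placeholder built from three of the names in the example above.

```python
import requests


def parse_cookie_header(header):
    """Split a raw 'name=value; name2=value2' string into a dict."""
    cookies = {}
    for part in header.split(';'):
        name, sep, value = part.strip().partition('=')
        if sep:  # skip fragments that contain no '='
            cookies[name] = value
    return cookies


# Placeholder cookie string; a real one would be copied from the browser.
raw_cookie = 'tst=h; n_c=1; l_n_c=1'
cookies = parse_cookie_header(raw_cookie)
print(cookies)

# Inspect the Cookie header requests would send, without sending it.
prepared = requests.Request('GET', 'https://www.zhihu.com/hot', cookies=cookies).prepare()
print(prepared.headers['Cookie'])
```

Keeping cookies in a dict makes it easier to update or remove a single value than editing one long header string.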

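Session: sharing cookies

To share cookies across several requests, use the session object that requests provides.

Note: this session is not the server-side session from web development. It is simply an object that represents one conversation with the server.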

    import requests

    # Login URL
    post_url = 'https://i.meishi.cc/login.php?redirect=https%3A%2F%2Fwww.meishij.net%2F'
    post_data = {'username': '[email protected]', 'password': 'wq15290884759.'}
    headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/73.0.3683.86 Safari/537.36'}

    # Log in
    # Create a session object
    session = requests.Session()
    # Send the POST request with the login data
    session.post(post_url, headers=headers, data=post_data)

    # The session now holds the login cookies, so the personal page can be fetched
    url = 'https://i.meishi.cc/cook.php?id=13686422'
    resp = session.get(url)
    print(resp.text)
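How a session carries state from one request to the next can be observed offline. In this sketch the cookie is set by hand as a stand-in for what the server would set after a successful login; the `login_token` name and the User-Agent string are made up for illustration.

```python
import requests

with requests.Session() as session:
    # Headers set on the session apply to every request it sends.
    session.headers.update({'User-Agent': 'example-client/0.1'})  # made-up UA
    # Stand-in for a cookie the server would set after a successful login.
    session.cookies.set('login_token', 'abc123')

    # prepare_request merges session state into the request without sending it.
    request = requests.Request('GET', 'https://i.meishi.cc/cook.php?id=13686422')
    prepared = session.prepare_request(request)

print(prepared.headers['User-Agent'])
print(prepared.headers['Cookie'])
```

Setting the User-Agent once on the session also avoids having to pass `headers=` to every call.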

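Handling untrusted SSL certificates:

For sites whose SSL certificate is already trusted, such as https://www.baidu.com/, requests returns the response normally.

If the site uses a self-signed certificate, browsers do not trust it and flag the site as insecure, and requests raises an error when you try to crawl it. To get around this, pass the `verify=False` argument, which turns off certificate verification for that request.

Example code: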

    import requests

    resp = requests.get('https://inv-veri.chinatax.gov.cn/', verify=False)
    print(resp.content.decode('utf-8'))
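With `verify=False`, urllib3 emits an `InsecureRequestWarning` on every request. One possible way to handle this, sketched below, is to silence the warning explicitly, or better, to keep verification on and point `verify` at the site's CA file. `make_session` is a hypothetical helper and `/path/to/ca.pem` is a placeholder path.

```python
import requests
import urllib3


def make_session(ca_bundle=None):
    """Create a session that trusts ca_bundle, or skips verification if none is given."""
    session = requests.Session()
    if ca_bundle:
        # Preferred: keep verification on, but trust the site's own CA file.
        session.verify = ca_bundle
    else:
        # Fallback: disable verification and silence the resulting warning.
        session.verify = False
        urllib3.disable_warnings(urllib3.exceptions.InsecureRequestWarning)
    return session


insecure = make_session()
pinned = make_session('/path/to/ca.pem')  # hypothetical path
print(insecure.verify, pinned.verify)
```

Disabling verification removes protection against man-in-the-middle attacks, so it is best limited to sites you already know and to cases where no CA file is available.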

